Turn on the television. Open your preferred streaming service. Get yourself a Coke. A demonstration of our time’s most important visual technology can be found right in your living room.

Path tracing has swept through visual media, propelled by an explosion in computing power over the last decade and a half.

It has brought big effects to the biggest blockbusters, cast subtle light and shadow on the most immersive melodramas, and propelled the art of animation to new heights.

More is on the way.

Path tracing is now available in real time, letting users explore interactive, photorealistic 3D environments filled with dynamic light and shadow, reflections, and refractions.

What exactly is path tracing? Its central idea is simple, and it connects innovators in the arts and sciences across half a millennium.

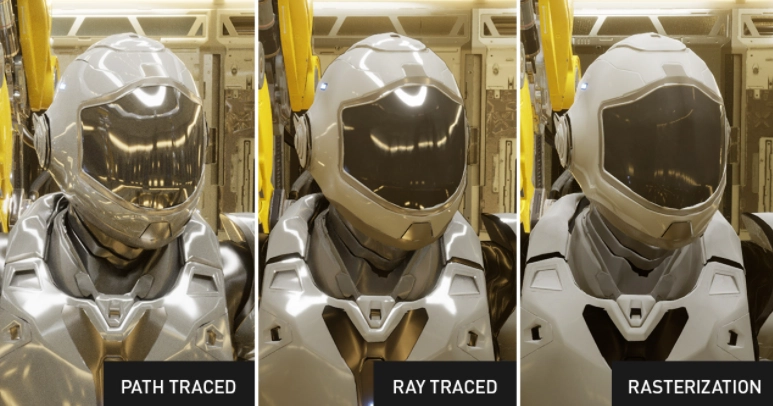

What’s the Difference Between Rasterization and Ray Tracing?

Let’s start by defining some terms and how they’re used today to create interactive graphics, meaning graphics that react in real time to user input, as in video games.

The first is rasterization, a technique that creates an image from a single point of view. It has always been at the heart of GPUs. NVIDIA GPUs today can produce over 100 billion rasterized pixels per second. Rasterization is therefore ideal for real-time graphics, such as gaming.

Compared to rasterization, ray tracing is a more powerful technique. It can determine what is visible from many different points, in many different directions, rather than being limited to what is visible from a single point. NVIDIA GPUs have provided specialized RTX hardware to accelerate this complex computation since the NVIDIA Turing architecture. A single GPU today is capable of tracing billions of rays per second.

The ability to trace all of those rays allows for a much more accurate simulation of how light scatters in the real world than is possible with rasterization. However, we must still address the issues of how we will simulate light and how we will transfer that simulation to the GPU.

What is Ray Tracing?

Understanding how we got here will help us better answer that question.

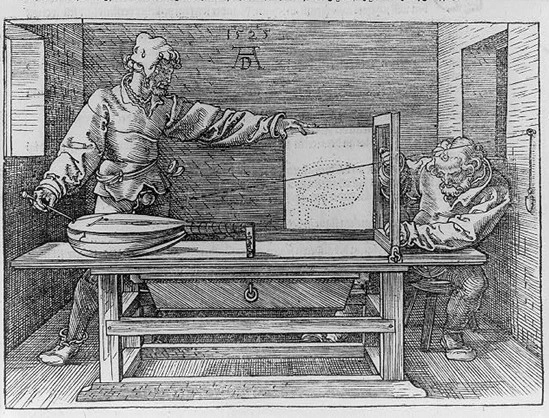

NVIDIA vice president of graphics research David Luebke likes to start the story in the 16th century with Albrecht Dürer, one of the most important figures of the Northern European Renaissance, who replicated a 3D image on a 2D surface using string and weights.

Dürer dedicated his life to bridging the gap between classical and contemporary mathematics and the arts, achieving new levels of expressiveness and realism.

Dürer was the first to describe ray tracing in his Treatise on Measurement, published in 1538. The simplest way to grasp the concept is to look at Dürer’s description.

Consider how the world we see around us is illuminated by light.

Now imagine tracing those rays of light backward from the eye, using a piece of string like the one Dürer used, to the objects the light interacts with. That’s ray tracing.
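To make the string analogy concrete, here is a minimal sketch of a single ray leaving the eye and being tested against one object. The scene (a unit sphere five units in front of the eye) and the function name `ray_sphere_hit` are illustrative assumptions, not taken from any particular renderer.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    `direction` is assumed to be unit length.
    """
    # Vector from the sphere center to the ray origin.
    oc = tuple(o - c for o, c in zip(origin, center))
    # Solve |oc + t * direction|^2 = radius^2 for t (a quadratic in t).
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-9:
            return t  # nearest intersection in front of the eye
    return None

# Hypothetical scene: the eye at the origin, a unit sphere 5 units away.
eye = (0.0, 0.0, 0.0)
sphere_center, sphere_radius = (0.0, 0.0, -5.0), 1.0

hit = ray_sphere_hit(eye, (0.0, 0.0, -1.0), sphere_center, sphere_radius)
miss = ray_sphere_hit(eye, (0.0, 1.0, 0.0), sphere_center, sphere_radius)
print(hit, miss)  # 4.0 None
```

The ray aimed at the sphere reaches its surface after 4 units, and the ray aimed upward finds nothing. A renderer repeats this test for one or more rays per pixel against every object in the scene.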

In 1969, more than 400 years after Dürer’s death, IBM’s Arthur Appel showed how ray tracing could be applied to computer graphics by using it to compute visibility and shadows.

A decade later, Turner Whitted was the first to demonstrate how this concept could capture reflection, shadows, and refraction, showing how a seemingly simple idea could enable much more sophisticated computer graphics. In the years that followed, progress was quick.

In 1984, Robert Cook, Thomas Porter, and Loren Carpenter of Lucasfilm detailed how ray tracing could incorporate many standard filmmaking techniques previously unattainable in computer graphics, such as motion blur, depth of field, penumbras, translucency, and fuzzy reflections.

Two years later, Caltech professor Jim Kajiya’s crisp, seven-page paper, “The Rendering Equation,” connected computer graphics with physics via ray tracing and introduced the path-tracing algorithm, which makes it possible to accurately represent how light scatters throughout a scene.

What is Path Tracing?

Kajiya took an unlikely source of inspiration for path tracing: the study of radiative heat transfer, or how heat spreads throughout an environment. Using ideas from that field, he developed the rendering equation, which describes how light travels through the air and scatters from surfaces.
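For reference, the rendering equation is often written today in the hemispherical form below. The notation is the common modern one and is an assumption here, not necessarily the form used in Kajiya’s paper.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, \mathrm{d}\omega_i
```

Here $L_o$ is the light leaving point $x$ in direction $\omega_o$, $L_e$ is the light the surface emits itself, $f_r$ describes how the surface scatters light arriving from direction $\omega_i$, $L_i$ is that incoming light, $n$ is the surface normal, and the integral runs over the hemisphere $\Omega$ above the surface. Because $L_i$ at one point is $L_o$ at some other point, the unknown appears on both sides of the equation.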

Although the rendering equation is concise, it is hard to solve. Computer graphics scenes are complex, and today scenes with billions of triangles are not unusual. The rendering equation cannot be solved directly for such scenes, which led to Kajiya’s second crucial innovation.

Kajiya demonstrated how to solve the rendering equation using statistical techniques: even if it can’t be solved directly, it can be solved along the paths of individual rays. Photorealistic images are possible if it is solved along the path of enough rays to approximate the lighting in the scene accurately.

And how does the rendering equation get solved along a ray’s path? With ray tracing, which finds the surfaces each ray hits as it travels through the scene.

Monte Carlo integration is a statistical technique used by Kajiya that dates back to the 1940s when computers were first introduced. Developing better Monte Carlo algorithms for path tracing is still a work in progress; NVIDIA researchers are at the forefront of this field, regularly publishing new techniques that improve path tracing efficiency.
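As a small illustration of Monte Carlo integration, the sketch below estimates one integral that shows up in lighting calculations: the cosine term integrated over the hemisphere above a surface, whose exact value is π. The function name and sample counts are assumptions chosen for the example, and uniform sampling is only the simplest strategy, not the one a production renderer would necessarily use.

```python
import math
import random

def estimate_hemisphere_cosine_integral(num_samples, seed=0):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere.

    The exact answer is pi.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)  # uniform density over the hemisphere's solid angle
    total = 0.0
    for _ in range(num_samples):
        # For directions uniform over the hemisphere, cos(theta) is uniform on [0, 1).
        cos_theta = rng.random()
        # Each sample contributes the integrand divided by its probability density.
        total += cos_theta / pdf
    return total / num_samples

for n in (10, 1_000, 100_000):
    print(n, estimate_hemisphere_cosine_integral(n))
print("exact", math.pi)
```

With more samples, the estimate settles toward π, and the error shrinks roughly in proportion to one over the square root of the sample count. That slow convergence is why better sampling strategies matter so much for path tracing efficiency.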

By combining these two ideas, a physics-based equation for describing how light moves around a scene and Monte Carlo simulation to help choose a manageable number of paths back to a light source, Kajiya outlined the fundamental techniques that would become the standard for generating photorealistic computer-generated images.

His approach transformed a field dominated by a variety of disparate rendering techniques into one that could use simple, powerful algorithms to reproduce a wide range of visual effects with stunning realism, because those algorithms mirror the physics of how light moves through the real world.

Path Tracing Makes it to the Big Screen

In the years following its introduction in 1986, path tracing was regarded as an elegant technique, the most accurate approach known, but an utterly impractical one. Even though the images in Kajiya’s original paper were only 256 by 256 pixels, rendering them took over 7 hours on an expensive minicomputer that was far more powerful than the computers available to most people.

However, the technique became more and more practical as computing power grew exponentially, as described by Moore’s law: advances allowed chipmakers to double the number of transistors on microprocessors every 18 months.

Ray tracing was first used to enhance computer-generated imagery in movies like 1998’s A Bug’s Life, and it has since been used in an increasing number of films. In 2006, Monster House, the first entirely path-traced film, stunned audiences. It was rendered with Arnold, software co-developed by Solid Angle SL (since acquired by Autodesk) and Sony Pictures Imageworks.

The film was a box office success, grossing over $140 million worldwide. It also opened people’s eyes to the possibilities of a new generation of computer animation. More movies began to use the technique as computing power improved, resulting in images often indistinguishable from those captured by a camera.

The issue is that rendering a single image still takes hours, and large clusters of servers, known as “render farms,” must continuously render images for months to create a complete movie. Bringing that to real-time graphics would be a huge step forward.

In terms of gaming, how does this look?

The idea of path tracing in games was unthinkable for many years. While many game developers would agree that path tracing would be desirable if it provided the performance required for real-time graphics, the performance was so far off that path tracing appeared unattainable.

However, as GPUs have become faster and faster, and now that RTX hardware is widely available, real-time path tracing is becoming a reality. Games have started by putting ray tracing to work in a limited way, similar to how movies began by incorporating some ray-tracing techniques before switching to path tracing.

At the moment, a growing number of games are ray traced in some way. They combine ray-tracing effects with traditional rasterization-based rendering techniques.

So, in this context, what does “path traced” mean? It could mean a mix of techniques: the primary rays could be rasterized, and the lighting for the scene could then be path traced.

Rasterization is the same as shooting a single set of rays from a single point and stopping when they hit something. Ray tracing takes this a step further, casting rays in any direction from multiple points. Path tracing simulates the true physics of light, using ray tracing as one component of a larger light simulation system.

In a path tracer, all lights in a scene are sampled stochastically, using Monte Carlo or other techniques, both for direct illumination and for global illumination, which lights rooms and environments with indirect lighting.

To do that, rays are traced back not through a single bounce but through multiple bounces, presumably all the way back to their light source, just as Kajiya outlined.
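The effect of allowing more bounces can be sketched with a toy model. The example below has no geometry at all: it simply assumes that each ray reaches the light with a fixed probability and otherwise hits a diffuse wall that reflects part of the light. The probabilities, albedo, and function names are all hypothetical values picked for illustration, not figures from any real scene or engine.

```python
import random

def trace_path(rng, max_bounces, light_prob=0.2, albedo=0.7, emission=1.0):
    """Follow one path through a toy scene and return the light it carries.

    There is no geometry here: each ray is assumed to reach the light with
    probability `light_prob` and otherwise to hit a diffuse wall that
    reflects a fraction `albedo` of the light. All values are hypothetical.
    """
    throughput = 1.0
    for _ in range(max_bounces + 1):
        if rng.random() < light_prob:
            return throughput * emission  # the path reached the light source
        throughput *= albedo  # the wall absorbs some energy, then we bounce
    return 0.0  # ran out of bounces before finding the light

def render_pixel(max_bounces, num_paths=100_000, seed=1):
    """Average many random paths to estimate the brightness of one pixel."""
    rng = random.Random(seed)
    return sum(trace_path(rng, max_bounces) for _ in range(num_paths)) / num_paths

one_bounce = render_pixel(max_bounces=1)
many_bounces = render_pixel(max_bounces=50)
exact = 0.2 * 1.0 / (1.0 - (1.0 - 0.2) * 0.7)  # closed form for unlimited bounces
print(one_bounce, many_bounces, exact)
```

In this toy setup, stopping after one bounce leaves the pixel noticeably darker than the closed-form answer, while allowing many bounces recovers the indirect light that the shorter paths miss. The cost is that each path requires more ray casts, which is the performance problem real-time path tracing has to solve.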

A few games have already done so, and the results are incredible.

Microsoft has released a Minecraft plugin that uses path tracing.

Quake II, the classic shooter often used as a sandbox for advanced graphics techniques, can now be fully path traced thanks to a new plugin.

More needs to be done. And game developers will need to know that their customers have the necessary computing power to enjoy path-traced gaming.

Gaming is the most challenging visual computing problem of all, demanding both high visual quality and the speed to respond to fast-twitch gamers.

Expect the techniques developed here to permeate all aspects of our digital lives.

The Future of Path Tracing

Putting path tracing to work is the next logical step as GPUs become more powerful.

With tools like Autodesk’s Arnold, Chaos Group’s V-Ray, or Pixar’s RenderMan, along with powerful GPUs, product designers and architects can generate photorealistic mockups of their products in seconds, letting them collaborate more effectively and avoid costly prototyping.

Ray tracing has proven itself to architects and lighting designers, who use it to simulate how light interacts with their designs.

Video games are the next frontier for ray tracing and path tracing as GPUs offer more computing power.

In 2018, NVIDIA unveiled NVIDIA RTX, a ray-tracing technology that gives game developers real-time, movie-quality rendering.

NVIDIA RTX supports ray tracing through various interfaces, thanks to a ray-tracing engine that runs on NVIDIA Volta, Turing, and Ampere architecture GPUs.

Furthermore, NVIDIA has teamed up with Microsoft to provide full RTX support through Microsoft’s new DirectX Raytracing (DXR) API.

Since then, NVIDIA has continued to develop NVIDIA RTX technology as more game developers create games that use real-time ray tracing.

Real-time path tracing is also supported in Minecraft, transforming its blocky world into immersive landscapes awash in light and shadow.

More is on the way thanks to more powerful hardware and the proliferation of software tools and related technologies.

As a result, digital experiences such as games, virtual worlds, and even online collaboration tools will have the cinematic quality of a Hollywood blockbuster.

Don’t get too comfortable. What you’re seeing from the comfort of your living room couch is just a taste of what’s to come in the world.